Common task method

Alone we can do so little, together we can do so much.

— Helen Keller

The Challenge

Despite advances in frontier models, we have yet to see this progress fully applied in important areas of science like chemistry, materials science, and climate science

Researchers used DeepMind’s AlphaFold to predict the structures of more than 200 million proteins from roughly 1 million species, covering almost every known protein on the planet! Although not every prediction in the open-access AlphaFold Protein Structure Database will be accurate, this is a massive step forward for the field of protein structure prediction.

However, we’re not seeing the same level of impact across other areas of science. That’s because we’re under-investing in the infrastructure needed to apply AI at scale—things like open datasets, shared benchmarks, and collaborations between experimental and computational researchers.

The question that science agencies and research communities should be actively exploring is this: what were the preconditions for AlphaFold, and are there steps we can take to create those conditions in other fields?

The Play

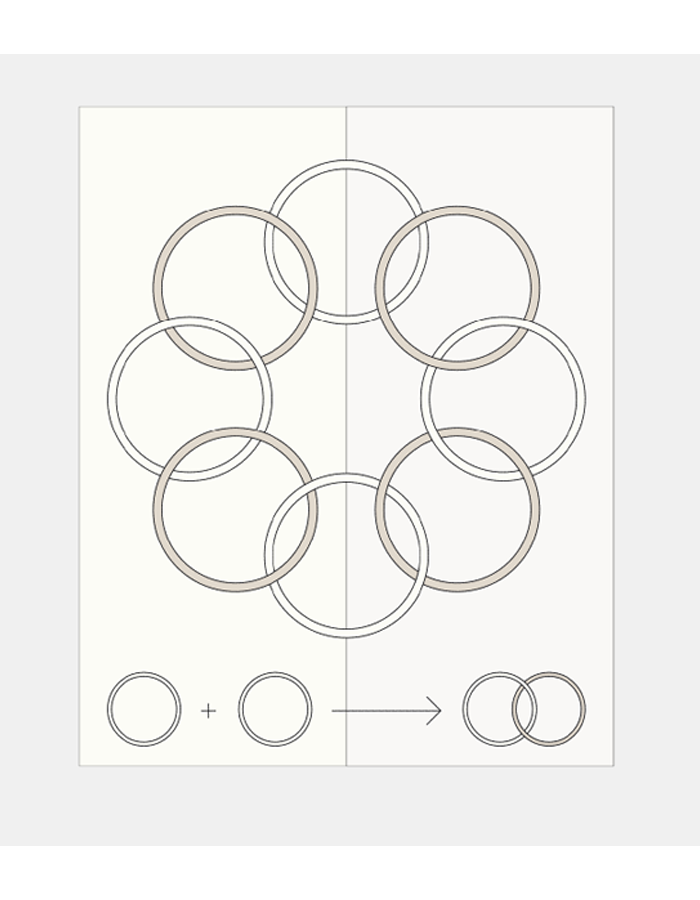

The Common Task Method (CTM) unites research communities to achieve progress on a challenging, well-defined task using standardized datasets and clear success metrics

One framework which explains the success of AlphaFold and how we might replicate that success in other fields is what linguist Mark Liberman calls the “Common Task Method.”

In a CTM, competitors share the common task of training a model on a challenging, standardized dataset with the goal of achieving a better score. A CTM typically has four elements:

Tasks are formally defined with a clear mathematical interpretation

Easily accessible gold-standard datasets are publicly available in a ready-to-go standardized format

One or more quantitative metrics are defined for each task to judge success

State-of-the-art methods are ranked in a continuously updated leaderboard
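The four elements above can be sketched in a few lines of Python. This is a minimal illustration, not how any real benchmark is run: the `CommonTask` class, the toy regression task, and the team names are all hypothetical, and mean absolute error stands in for whatever metric a community would actually agree on.

```python
from dataclasses import dataclass, field
from typing import Callable, Sequence

# A "model" here is any function mapping one input to one prediction (assumption).
Model = Callable[[float], float]


def mean_absolute_error(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Quantitative metric (element 3); lower is better."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


@dataclass
class CommonTask:
    """Hypothetical container bundling the four CTM elements."""
    name: str                      # element 1: the formally defined task
    test_inputs: Sequence[float]   # element 2: standardized dataset (inputs)
    test_targets: Sequence[float]  # element 2: standardized dataset (targets)
    metric: Callable[[Sequence[float], Sequence[float]], float] = mean_absolute_error
    scores: dict = field(default_factory=dict)  # element 4: leaderboard state

    def submit(self, team: str, model: Model) -> float:
        """Score a submission on the shared test set and keep the team's best score."""
        predictions = [model(x) for x in self.test_inputs]
        score = self.metric(self.test_targets, predictions)
        best = self.scores.get(team)
        if best is None or score < best:
            self.scores[team] = score
        return score

    def leaderboard(self) -> list:
        """Return (team, score) pairs, lowest error first."""
        return sorted(self.scores.items(), key=lambda item: item[1])


# Toy task: predict y = 2x from x.
task = CommonTask(
    name="toy-regression",
    test_inputs=[1.0, 2.0, 3.0],
    test_targets=[2.0, 4.0, 6.0],
)
task.submit("team_a", lambda x: 2.0 * x)  # exact model
task.submit("team_b", lambda x: x + 1.0)  # rough baseline
print(task.leaderboard())
```

Because every team is scored by the same metric on the same data, the ranking is directly comparable across submissions, which is the property the CTM depends on.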

Computational physicist and synthetic biologist Erika DeBenedictis has proposed adding a fifth component: “new data can be generated on demand.” DeBenedictis, who runs competitions such as the 2022 BioAutomation Challenge, argues that creating extensible living datasets has a few advantages. This approach can detect and help prevent overfitting; active learning can be used to improve performance per new datapoint; and datasets can grow organically to a useful size.
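One way to see why on-demand data helps detect overfitting is to compare a model's score on a static benchmark with its score on freshly generated data. The sketch below is a toy illustration under stated assumptions: `true_function` is a hypothetical stand-in for the experiment that produces ground truth, and the "model" is deliberately pathological, having simply memorized the benchmark answers.

```python
import random


def true_function(x: float) -> float:
    """Hypothetical stand-in for the experiment that yields ground-truth labels."""
    return 3.0 * x + 1.0


def generate_data(n: int, rng: random.Random) -> list:
    """Generate n fresh (input, label) pairs on demand."""
    xs = [rng.uniform(0.0, 10.0) for _ in range(n)]
    return [(x, true_function(x)) for x in xs]


def mean_abs_error(model, data) -> float:
    return sum(abs(model(x) - y) for x, y in data) / len(data)


rng = random.Random(0)
static_benchmark = generate_data(50, rng)

# A deliberately overfit "model": it memorized the benchmark answers
# and returns 0.0 for any input it has not seen before.
memorized = dict(static_benchmark)


def overfit_model(x: float) -> float:
    return memorized.get(x, 0.0)


fresh_data = generate_data(50, rng)
static_error = mean_abs_error(overfit_model, static_benchmark)
fresh_error = mean_abs_error(overfit_model, fresh_data)

# A large gap between the two errors is the overfitting signal.
print(f"static: {static_error:.2f}, fresh: {fresh_error:.2f}")
```

On a static leaderboard this model would look perfect; only the fresh data exposes it.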

According to Renaissance Philanthropy Senior Advisor Mike Frumkin, founder of Google Accelerated Science, a data generation capability is even more valuable than a static dataset. This is because researchers may not immediately pinpoint the right “objective function” needed to develop a model with practical, real-world applications, nor identify the most critical problem to address. Achieving this requires ongoing collaboration and iteration between experimental and computational teams, with a well-balanced approach to latency, throughput, and flexibility.

There are a number of reasons why we are likely to be under-investing in this approach:

These open datasets, benchmarks and competitions are what economists call “public goods.” They benefit the field as a whole, and often do not disproportionately benefit the team that created the dataset. Also, the CTM requires some level of community buy-in. No single researcher can unilaterally define the metrics that a community will use to measure progress.

Researchers don’t spend a lot of time coming up with ideas if they don’t see a clear and reliable path to getting them funded. Researchers ask themselves, “what datasets already exist, or what dataset could I create with a $500,000 – $1 million grant?” They don’t ask the question, “what dataset combined with CTM would have a transformational impact on a given scientific or technological challenge, regardless of the resources that would be required to create it?” If we want more researchers to generate concrete, high-impact ideas, we have to make it worth the time and effort to do so.

Many key datasets (e.g., in fields such as chemistry) are proprietary, and were designed prior to the era of modern machine learning. Although researchers are supposed to include Data Management Plans in their grant applications, these requirements are not enforced, data is often not shared in a way that is useful, and data can be of variable quality and reliability. In addition, large dataset creation may sometimes not be considered academically novel enough to garner high impact publications for researchers.

Creation of sufficiently large datasets may be prohibitively expensive. For example, experts estimate that the cost of recreating the Protein Data Bank would be $15 billion! Science agencies may also need to explore the role that innovation in hardware or new techniques can play in reducing the cost and increasing the uniformity of the data, using, for example, automation, massive parallelism, miniaturization, and multiplexing. A good example of this was NIH’s $1,000 Genome project, led by Jeffrey Schloss.

Case Studies

Private individuals, corporations, and academic institutions have used CTMs to apply AI to solving a wide range of problems from protein structure prediction to reading ancient scrolls

Common Task Methods have been critical to progress in AI/ML. As David Donoho noted in 50 Years of Data Science, the ultimate success of many automatic processes that we now take for granted—Google translate, smartphone touch ID, smartphone voice recognition—derives from the CTF (Common Task Framework) research paradigm, or more specifically its cumulative effect after operating for decades in specific fields. Most importantly for our story: those fields where machine learning has scored successes are essentially those fields where CTF has been applied systematically.

AlphaFold and Protein Structure Prediction in CASP14

Back in 2020, DeepMind’s AlphaFold model achieved a scientific milestone at CASP14, a global competition dedicated to advancing the field of protein structure prediction. Since its creation in 1994 by the Protein Structure Prediction Center, CASP has challenged research teams to accurately predict the 3D structures of proteins using blind targets. These targets are newly solved protein structures that have not yet been made public, making CASP an excellent benchmark for assessing innovation because there is no chance that the assessed protein structures would be in the training datasets of candidate machine learning models.

AlphaFold’s performance at CASP14 was groundbreaking. It reached a median Global Distance Test (GDT) score of 92.4 across all targets, marking the first time a model had predicted protein structures with near-experimental accuracy. This achievement was the result of years of effort and innovation, underpinned by the Protein Data Bank, a publicly funded and open-access repository of 3D molecular structures which provided the high-quality data needed to train AlphaFold’s deep learning algorithms.
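To make the GDT score concrete, here is a simplified sketch of the commonly reported GDT_TS variant: the average, over distance cutoffs of 1, 2, 4, and 8 ångströms, of the percentage of residues whose predicted position falls within that cutoff of the experimental position. It assumes the two structures are already superimposed and list the same residues in the same order; the procedure used in CASP also searches over superpositions, which is omitted here. The coordinates are made up for illustration.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float, float]


def gdt_ts(predicted: Sequence[Point], experimental: Sequence[Point]) -> float:
    """Simplified GDT_TS on a 0-100 scale.

    Assumes the structures are already superimposed and aligned residue by
    residue; no search over superpositions is performed.
    """
    if len(predicted) != len(experimental) or not predicted:
        raise ValueError("need two non-empty coordinate lists of equal length")
    # Per-residue distance between predicted and experimental positions.
    distances = [math.dist(p, e) for p, e in zip(predicted, experimental)]
    cutoffs = (1.0, 2.0, 4.0, 8.0)  # angstroms
    # Fraction of residues within each cutoff, then averaged over cutoffs.
    fractions = [sum(d <= c for d in distances) / len(distances) for c in cutoffs]
    return 100.0 * sum(fractions) / len(cutoffs)


# Illustrative coordinates only: four residues with errors of 0.5, 1.5, 3.0, and 10.0.
experimental = [(0.0, 0.0, 0.0), (3.8, 0.0, 0.0), (7.6, 0.0, 0.0), (11.4, 0.0, 0.0)]
predicted = [(0.5, 0.0, 0.0), (3.8, 1.5, 0.0), (7.6, 0.0, 3.0), (11.4, 10.0, 0.0)]
score = gdt_ts(predicted, experimental)
print(score)  # 56.25
```

A perfect prediction scores 100, so a median above 90 means the large majority of residues sit within a few ångströms of their experimentally determined positions.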

AlphaFold has transformed the study of structural biology; scientists can now predict protein structures without time-consuming and expensive experimental setups, enabling breakthroughs in understanding diseases, designing new enzymes, and developing novel therapeutic strategies. Pharmaceutical companies incorporate AlphaFold into their research workflows to accelerate drug discovery and development. After AlphaFold beat all other participants in CASP14 by a significant margin, entrants to CASP15 and CASP16 expanded upon the deep learning-based approach that DeepMind pioneered.

The Vesuvius Challenge

Launched in 2023 by Brent Seales, Nat Friedman, and Daniel Gross, the Vesuvius Challenge offered over $1 million in prize money to “resurrect an ancient library from the ashes of a volcano.” The challenge was to “use computer vision, machine learning, and hard work” to decipher an ancient papyrus scroll.

The scroll is one of 800 that make up the Herculaneum papyri, carbonized in 79 C.E. by the eruption of Mount Vesuvius. They could unlock significant discoveries, as they constitute the only ancient library known from the classical world. Researchers found the scrolls in the 18th century but could not read them because unrolling them causes them to disintegrate.

For this challenge, the organizers released high-resolution CT scans of the scrolls and invited people from all over the world to decipher at least 85 percent of four passages on the scroll. Together, Youssef Nader, Julian Schilliger, and Luke Farritor trained machine learning algorithms to decipher over 2,000 Greek letters of the text and won the grand prize.

While this only represents about five percent of the full scroll’s text, it appears the scroll is a philosophical discussion of life’s pleasures, like food and music. To build on the momentum of the first challenge, there is now a Vesuvius Challenge Stage 2. It offers a prize of $200,000 to the first team to decipher 90 percent of four scrolls that have been scanned.

Risks

Some researchers point to negative sociological impacts associated with “SOTA-chasing,” a single-minded focus on generating a state-of-the-art result. These include narrowing the range of research that is regarded as legitimate, encouraging too much competition and not enough cooperation, and overhyping AI/ML results with claims of “super-human” levels of performance. Also, a researcher who contributes to increasing the size and usefulness of the dataset may not get the same recognition as the researcher who gets a state-of-the-art result.

Some fields that have become overly dominated by incremental improvements in a metric have had to introduce Wild and Crazy Ideas as a separate track in their conferences to create a space for more speculative research directions.

How we can help

If philanthropists and foundations are interested in accelerating the development of an AI application, Renaissance Philanthropy can tap a global network of experts to determine whether:

Harnessing the CTM for that particular application would make sense

There is existing high-quality data, or whether it would need to be created de novo

A critical mass of scientists and engineers would be interested in working on this problem

There are opportunities to lower the cost of generating the data

If there is a compelling case to pursue the CTM, Renaissance Philanthropy can coordinate one or more funders to design and implement a program.

Resources

AI for science: creating a virtuous circle of discovery and innovation (Tom Kalil interview with Federation of American Scientists)

Reproducible Research and the Common Task Method (Mark Liberman)

Emerging Trends: SOTA-Chasing (Kenneth Ward Church and Valia Kordoni)

You Could Win $1 Million by Deciphering These Ancient Roman Scrolls (Smithsonian Magazine)

Announcing the worldwide Protein Data Bank (Nature Structural & Molecular Biology)

AlphaFold Protein Structure Database (Google DeepMind)

AlphaFold 3 predicts the structure and interactions of all of life’s molecules (Google DeepMind)

Accurate structure prediction of biomolecular interactions with AlphaFold 3 (Nature)

AlphaFold: The making of a scientific breakthrough (Google DeepMind on YouTube)